Tutor.mk

Personalised AI learning platform

- Macedonian curriculum

- Local payments

- 320+ students

Open ↗

24‑7 voice‑first AI friend

Instant explanations & live avatars

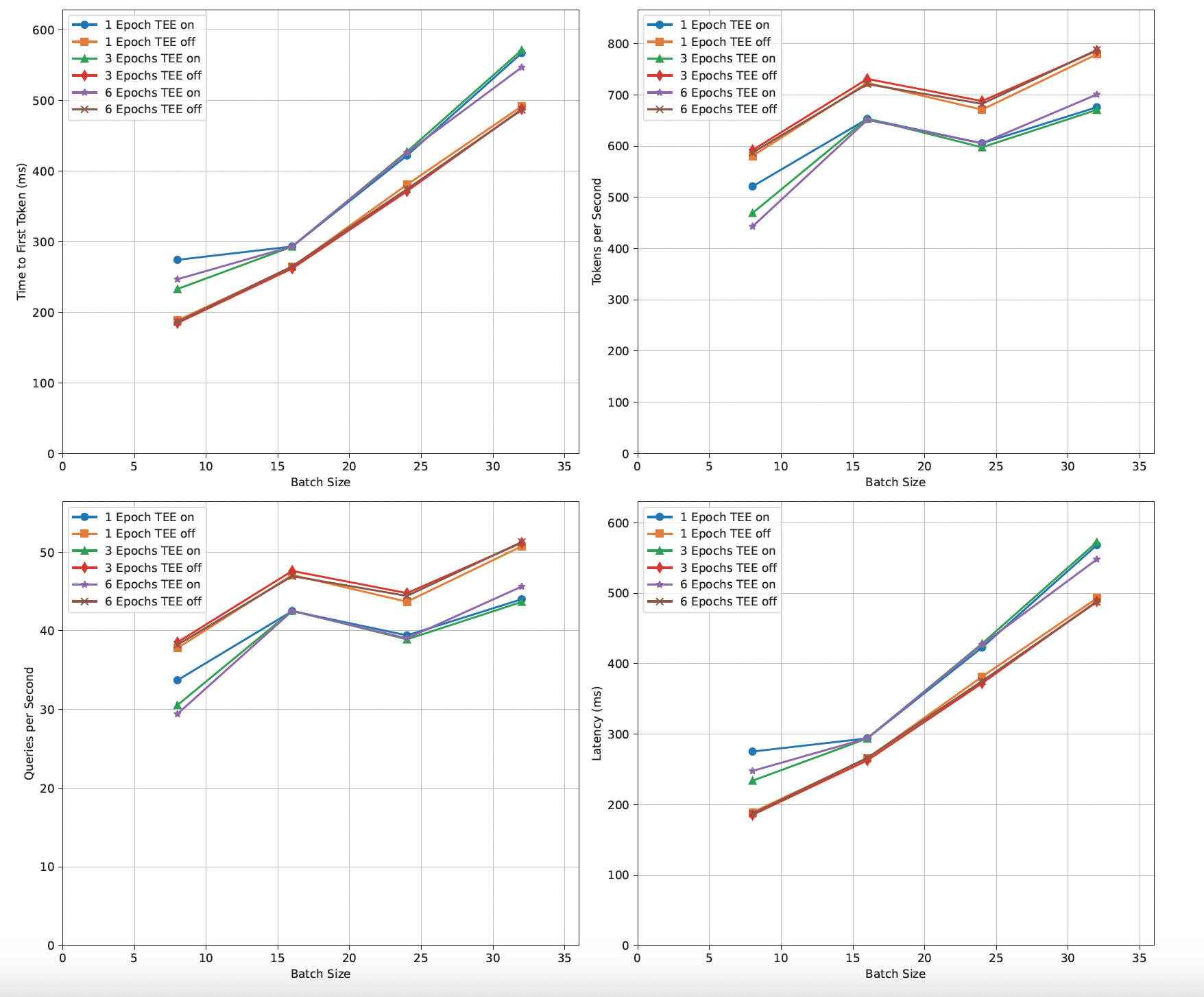

LLM evaluation in VM-based TEEs: under 20% performance cost on a 110 M-param BERT.

My contribution to the study was a fully reproducible Bash + Python pipeline that (1) launches Kata Containers under AMD SEV-SNP, (2) runs a parametrised train.py/infer.py for every ⟨batch size, epoch⟩ pair, and (3) ships the raw logs for aggregation. As its final step, the pipeline auto-generates the four graphs to the right with Matplotlib.
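As a rough illustration of step (2), the sweep over ⟨batch size, epoch⟩ pairs could be driven by something like the sketch below. The grid values and the `--batch-size` / `--epochs` flag names are assumptions made for this example, not the study's actual settings, and the sketch only builds the command lines; it does not launch anything inside a container.

```python
import itertools
import shlex

# Hypothetical sweep values; the study's actual grid is not stated here.
BATCH_SIZES = [8, 16, 32]
EPOCHS = [1, 3]


def build_commands(script, batch_sizes, epochs):
    """Return one shell-quoted command line per (batch size, epoch) pair."""
    commands = []
    for batch_size, epoch in itertools.product(batch_sizes, epochs):
        # --batch-size and --epochs are assumed flag names for illustration.
        argv = [
            "python", script,
            "--batch-size", str(batch_size),
            "--epochs", str(epoch),
        ]
        commands.append(shlex.join(argv))
    return commands


if __name__ == "__main__":
    for command in build_commands("train.py", BATCH_SIZES, EPOCHS):
        print(command)
```

Building the commands as plain strings keeps the sweep easy to log and replay, which is one way to keep a run reproducible.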

Net takeaway: a 110 M-param BERT can train & serve entirely inside a VM-based TEE with a predictable 10-16% tax.
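For readers who want to see what a percentage "tax" means here, a minimal sketch of the arithmetic follows, assuming the overhead is measured as the relative slowdown of a run inside the TEE against the same run outside it. The function name and the timings in the usage line are illustrative placeholders, not measurements from the study.

```python
def overhead_percent(baseline_seconds, tee_seconds):
    """Relative slowdown of the TEE run versus the baseline, in percent."""
    if baseline_seconds <= 0:
        raise ValueError("baseline_seconds must be positive")
    return (tee_seconds - baseline_seconds) / baseline_seconds * 100.0


if __name__ == "__main__":
    # Placeholder timings chosen only to show the calculation.
    print(f"{overhead_percent(200.0, 226.0):.1f}% overhead")
```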

DMs open at @super_bavario or ping me on LinkedIn.